Remote Visualization with ParaView

Run the remote ParaView server

For the purpose of this tutorial, we will run the job on the Okeanos cluster.

# allocate resources for the job

username@okeanos-login2:~> salloc -N1 -n8 --qos=hpc --partition=okeanos --account=GXX_YY --time=08:00:00

salloc: Granted job allocation 879513

salloc: Waiting for resource configuration

salloc: Nodes nid00XYZ are ready for job

# run an interactive job

okeanos-login2 /home/username> srun --pty /bin/bash -l

# load required modules

(base) username@nid00XYZ:~> module load common/go/1.13.12

(base) username@nid00XYZ:~> module load common/singularity/3.5.3

# launch the ParaView server within a Singularity container

(base) username@nid00XYZ:~> singularity run /apps/paraview-singularity/pv-v5.8.0-osmesa.sif /opt/paraview/bin/pvserver

WARNING: Bind mount '/home/username => /home/username' overlaps container CWD /home/username, may not be available

Waiting for client...

Connection URL: cs://nid00XYZ:11111

Accepting connection(s): nid00XYZ:11111

Warning

Replace nid00XYZ with the name of the node on which your job is running, as reported by salloc.

On your local computer

Download ParaView-5.8.0-MPI-Linux-Python3.7-64bit.tar.gz from https://www.paraview.org/download/.

Use exactly this version: the ParaView client and server versions must match, and the container used above provides ParaView 5.8.0.

Create an SSH tunnel to the compute node on which the ParaView server has been launched.

ssh -L 11111:nid00XYZ:11111 -v -J hpc.icm.edu.pl okeanos
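In the command above, `-L 11111:nid00XYZ:11111` forwards local port 11111 to port 11111 on the compute node, and `-J hpc.icm.edu.pl` routes the connection through the jump host. Because the node name changes with every allocation, it can be convenient to generate the command from a variable. The following is a minimal sketch, not part of the original tutorial: the `tunnel_cmd` function is a hypothetical helper, and the port default assumes pvserver reports port 11111 as in the output shown earlier.

```shell
#!/bin/sh
# Hypothetical helper (not part of the tutorial): print the tunnel command
# for a given compute node so the node name is substituted in one place.
tunnel_cmd() {
    node="$1"             # compute node running pvserver, e.g. nid00XYZ
    port="${2:-11111}"    # pvserver port from the "Connection URL" line
    # -L forwards local $port to $node:$port; -J hops through the jump host
    printf 'ssh -L %s:%s:%s -v -J hpc.icm.edu.pl okeanos\n' "$port" "$node" "$port"
}

# Print the command for the placeholder node; substitute your own node name.
tunnel_cmd nid00XYZ
```

The function only prints the command, so it can be reviewed before it is pasted into a terminal. Keep the tunnel open for as long as the ParaView client is connected.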

Launch ParaView

Extract ParaView-5.8.0-MPI-Linux-Python3.7-64bit.tar.gz and launch ParaView.
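On a Linux machine, the extraction and launch step might look like the sketch below. It assumes that the archive sits in the current directory and that it unpacks into a directory named after the archive with the client binary under `bin/paraview`; adjust the paths if your download differs.

```shell
#!/bin/sh
# Sketch: unpack the ParaView 5.8.0 client and start it.
archive="ParaView-5.8.0-MPI-Linux-Python3.7-64bit.tar.gz"

# Assumption: the archive unpacks into a directory named after the archive.
pv_dir="$(basename "$archive" .tar.gz)"

if [ -f "$archive" ]; then
    tar -xzf "$archive"          # extract into the current directory
    "./$pv_dir/bin/paraview" &   # start the GUI client in the background
else
    echo "archive not found: $archive" >&2
fi
```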

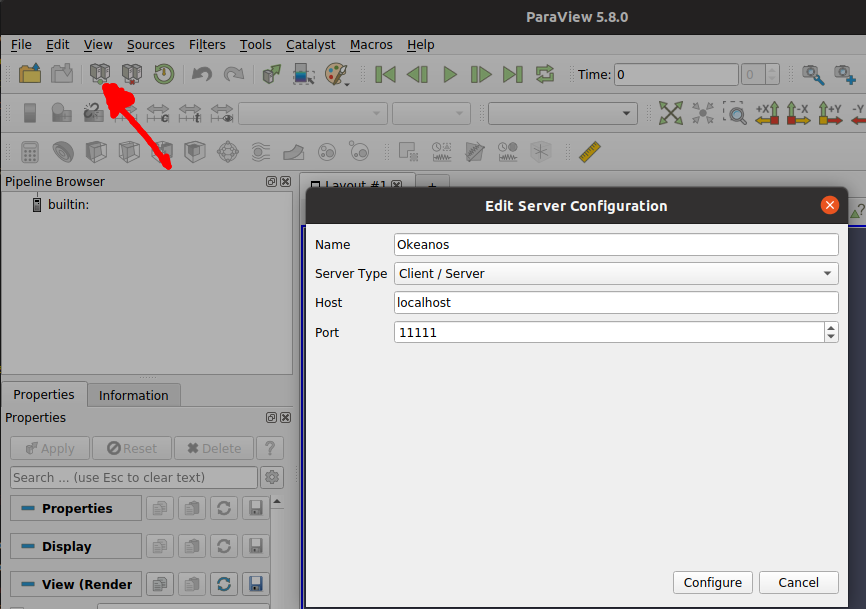

Configure

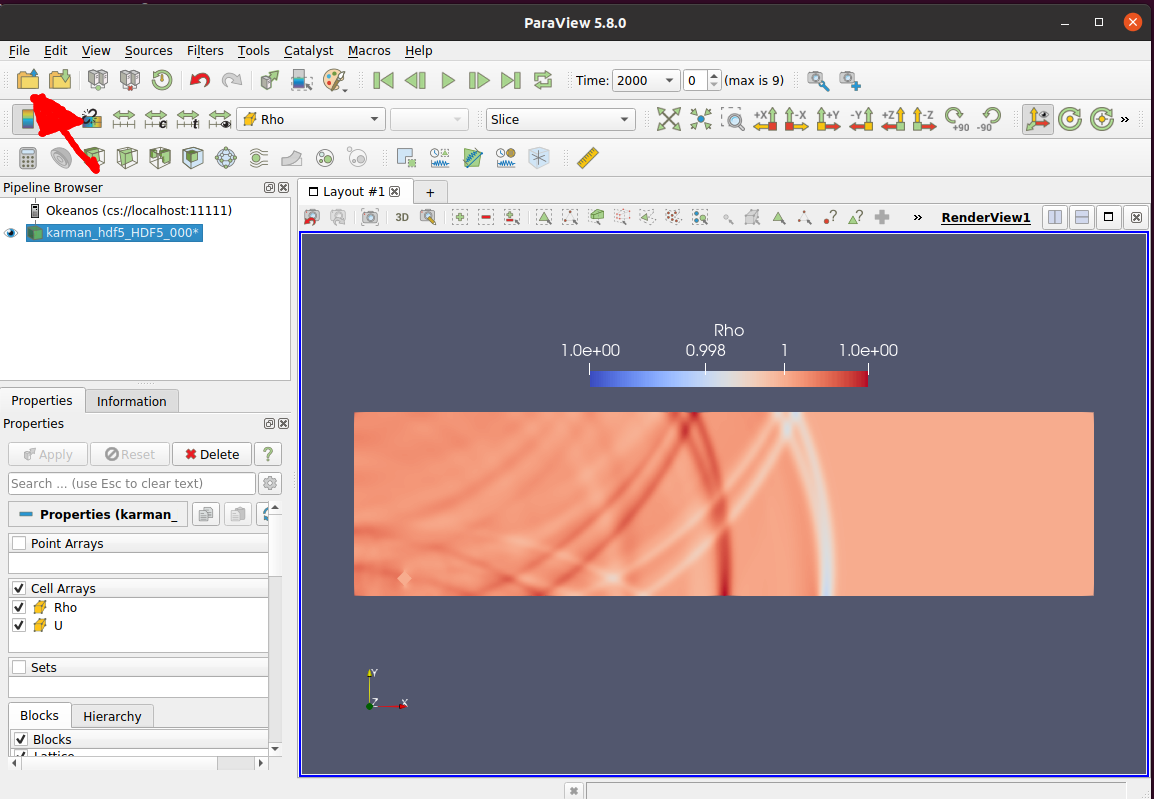

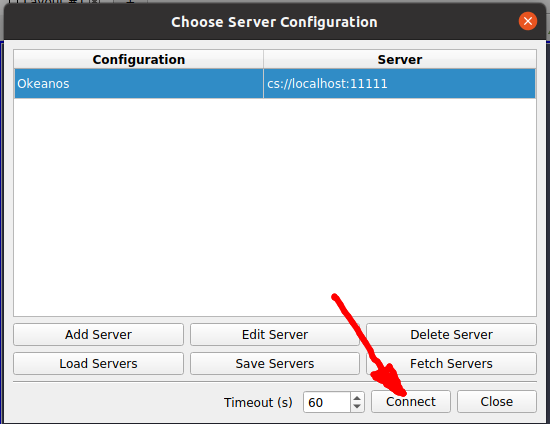

Connect
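With the SSH tunnel open, the server is reachable from the client at `localhost:11111`. As an alternative to the dialog, the connection can usually be requested when the client starts. The sketch below is an assumption rather than part of the original tutorial: it presumes that the `paraview` binary is on your `PATH`, that your build accepts the `--server-url` option, and that the tunnel forwards local port 11111.

```shell
#!/bin/sh
# Sketch: build the connection URL for the tunnelled pvserver.
host="localhost"   # local end of the SSH tunnel
port=11111         # local port forwarded by the ssh -L option
url="cs://${host}:${port}"
echo "$url"

# Assumption: the paraview binary is on PATH and accepts --server-url.
if command -v paraview >/dev/null 2>&1; then
    paraview --server-url="$url" &
fi
```

Once the client connects, the terminal running pvserver on the compute node should stop showing "Waiting for client...".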

Open files